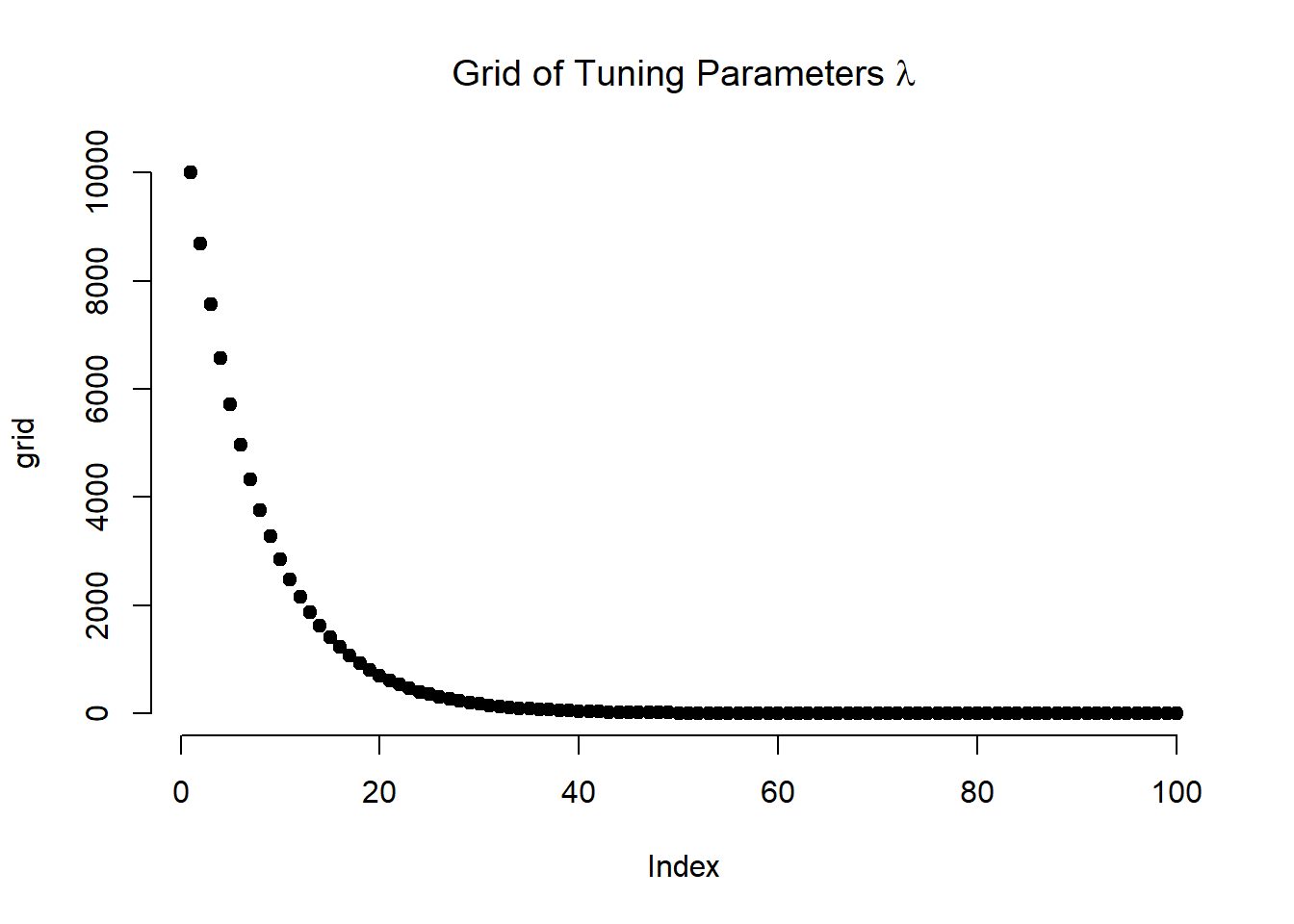

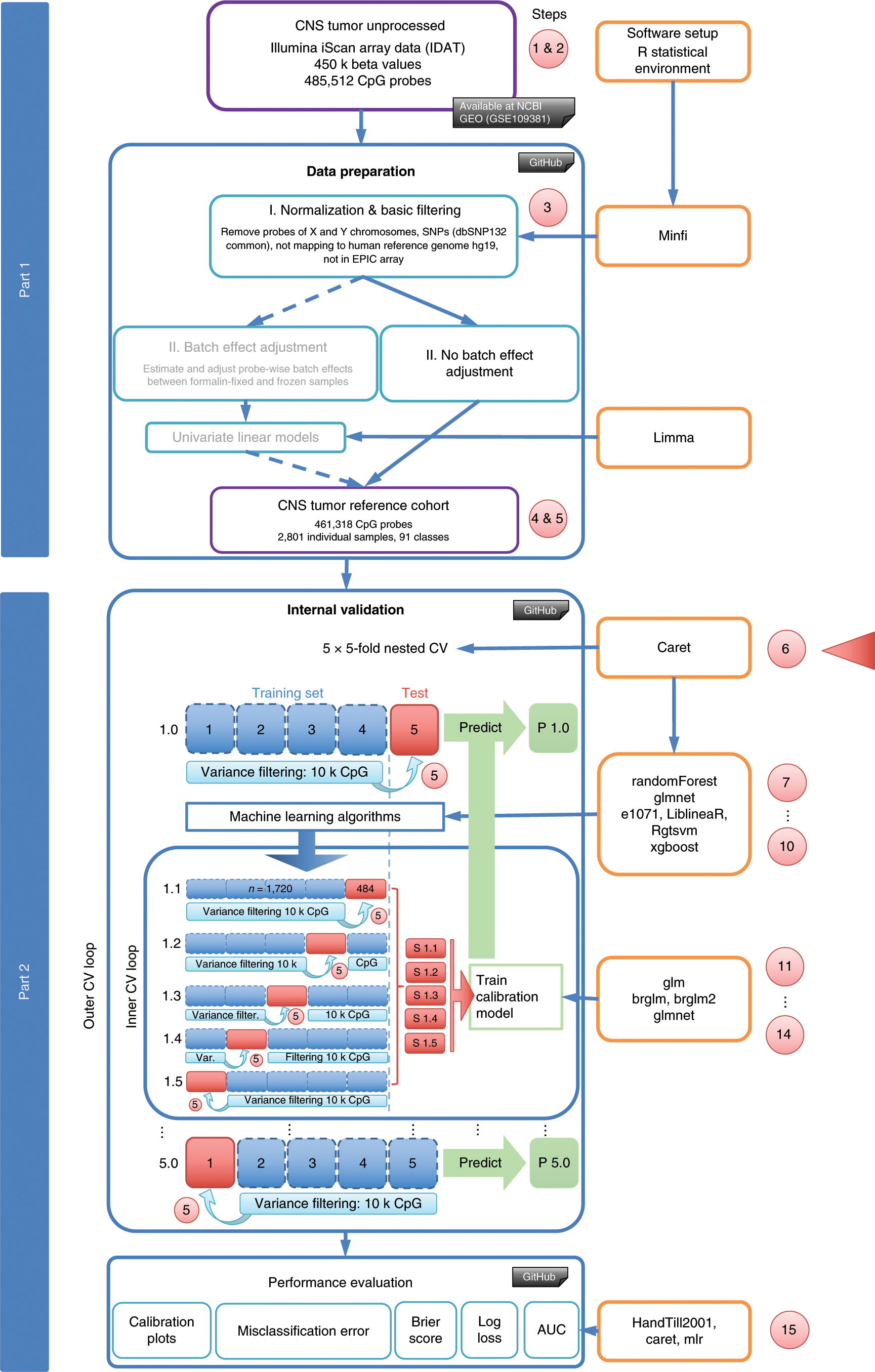

If users would like to cross-validate `alpha` as well, they should call `cv.glmnet` with a precomputed vector `foldid` and then use this same fold vector in separate calls to `cv.glmnet` with different values of `alpha`. Both steps use the built-in cross-validation function `cv.glmnet`.
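A minimal sketch of the shared-`foldid` approach, assuming a simulated Gaussian response; the data, the seed and the candidate `alpha` grid are illustrative choices, not part of the original text. Because every call sees exactly the same folds, the minimum CV errors for the different `alpha` values can be compared directly.

```r
library(glmnet)

set.seed(1)
n <- 100; p <- 20
# Simulated data (illustrative only): 3 informative predictors out of 20
x <- matrix(rnorm(n * p), n, p)
y <- drop(x[, 1:3] %*% c(2, -1, 0.5)) + rnorm(n)

# Precompute one fold assignment and reuse it for every alpha
foldid <- sample(rep(seq_len(10), length.out = n))
alphas <- c(0, 0.5, 1)  # ridge, elastic net, lasso

fits <- lapply(alphas, function(a) cv.glmnet(x, y, alpha = a, foldid = foldid))

# Minimum mean cross-validated error reached for each alpha
cv_min <- sapply(fits, function(f) min(f$cvm))
best_alpha <- alphas[which.min(cv_min)]
best_fit <- fits[[which.min(cv_min)]]
print(data.frame(alpha = alphas, min_cvm = cv_min))
```

The winning `alpha` is then used with `best_fit$lambda.min` (or `lambda.1se`) exactly as for a single `cv.glmnet` fit.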

Solution Day 6 Regularization

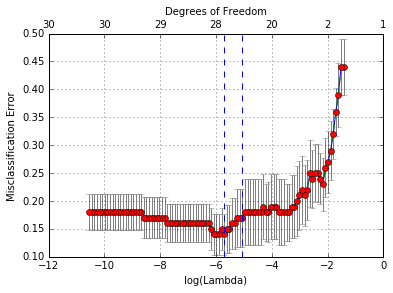

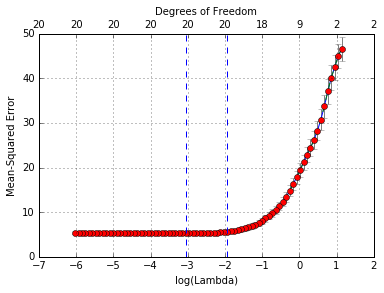

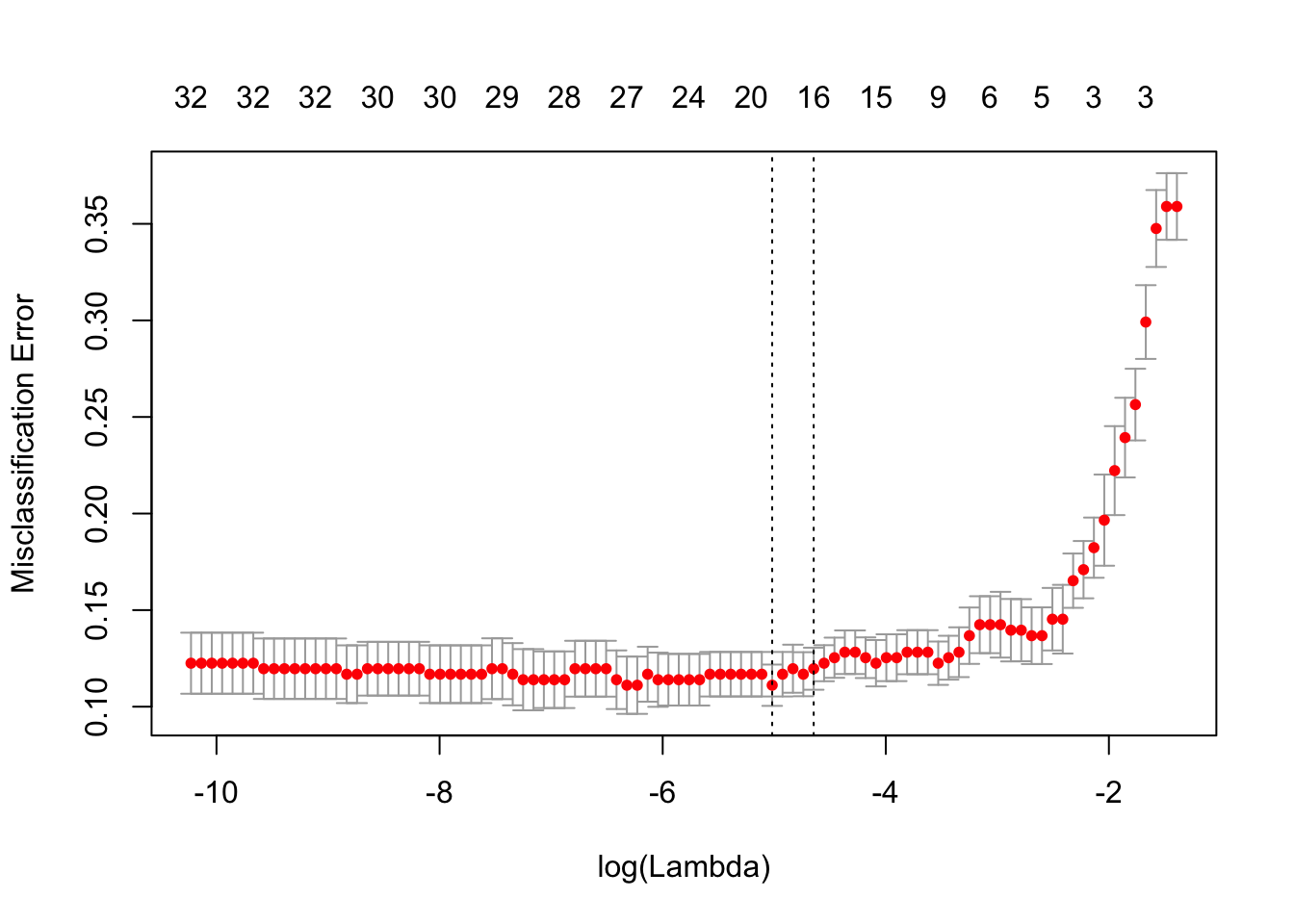

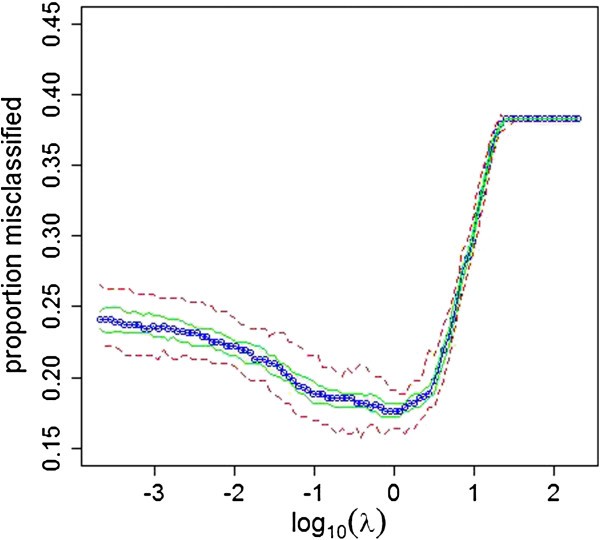

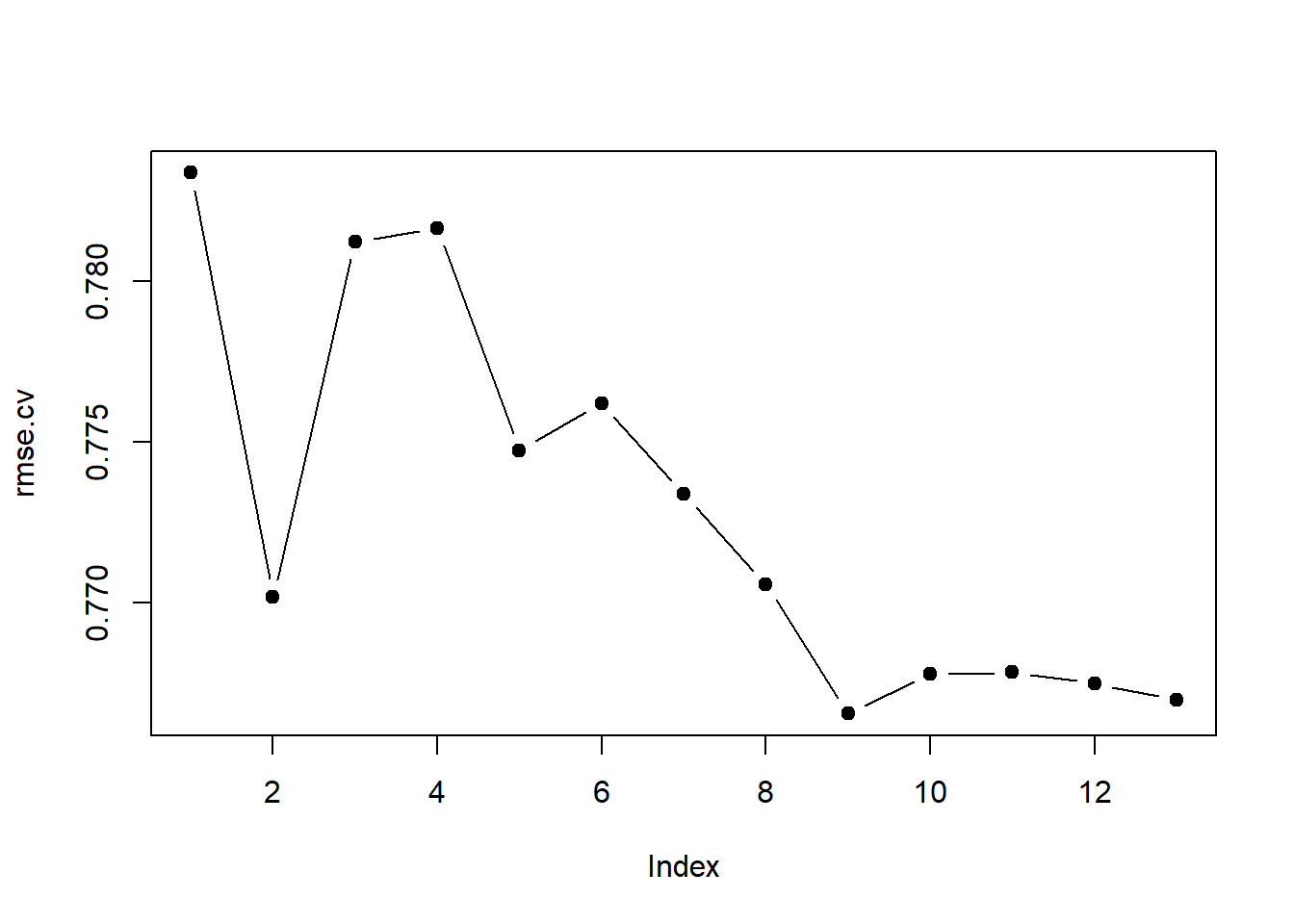

CV error rate in glmnet. Besides the lasso, glmnet also fits ridge regression and elastic-net regression. The optimal value of lambda is determined by n-fold cross-validation. `cv.glmnet` returns an object of class `cv.glmnet` (`cvfit` here), which is a list with all the ingredients of the cross-validation fit. A common question is how to extract the CV error at the optimal lambda using the glmnet package.
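One way to answer that question, sketched on simulated data (the data and seed are assumptions for illustration): the `cvm` component stores the mean cross-validated error for every value in `lambda`, and `cvsd` its estimated standard error, so the error at `lambda.min` or `lambda.1se` is just a lookup at the matching position.

```r
library(glmnet)

set.seed(42)
# Simulated data (illustrative only)
x <- matrix(rnorm(200 * 10), 200, 10)
y <- x[, 1] - 2 * x[, 2] + rnorm(200)

cvfit <- cv.glmnet(x, y)

# Positions of the two selected lambdas inside the lambda sequence
i_min <- which(cvfit$lambda == cvfit$lambda.min)
i_1se <- which(cvfit$lambda == cvfit$lambda.1se)

cv_err_min <- cvfit$cvm[i_min]   # CV error at lambda.min
cv_se_min  <- cvfit$cvsd[i_min]  # its estimated standard error
cv_err_1se <- cvfit$cvm[i_1se]   # CV error at lambda.1se
c(lambda.min = cvfit$lambda.min, cvm = cv_err_min, cvsd = cv_se_min)
```

For a binomial model fitted with `type.measure = "class"`, the same lookup returns the cross-validated misclassification rate.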

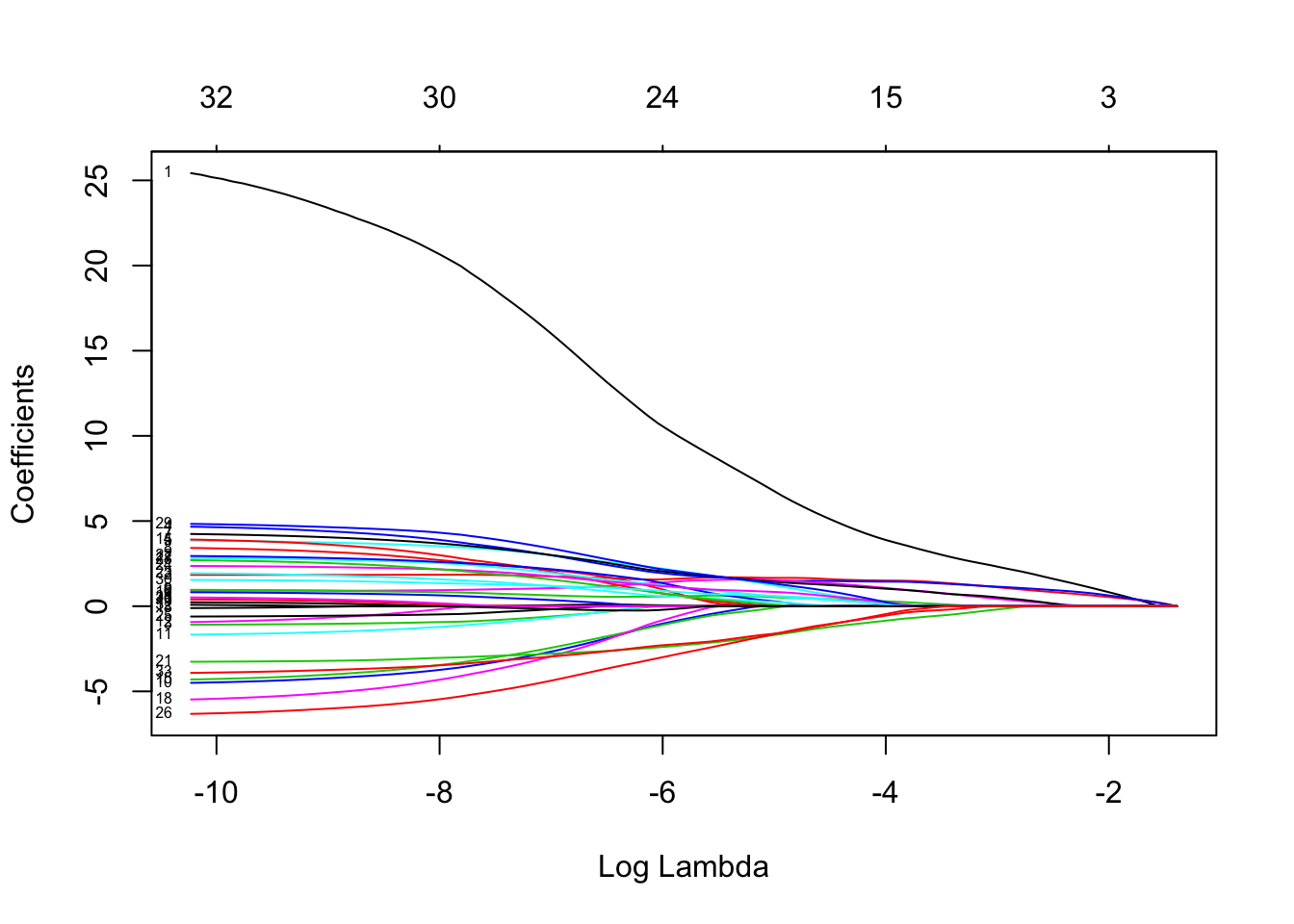

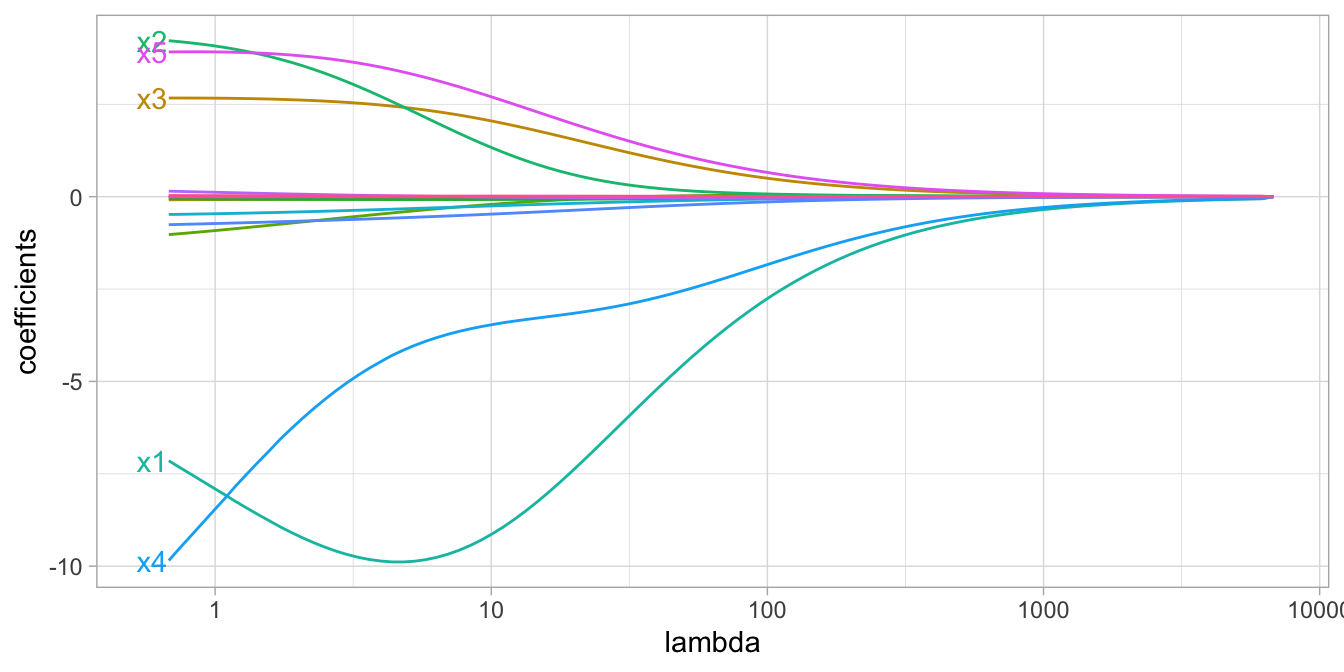

Gets the coefficient values and variable names from a model. For `confusion.glmnet`, `newx` is required. The `lambda` component of the returned object holds the values of lambda used in the fits. By default the function performs 10-fold cross-validation, though this can be changed using the argument `nfolds`. For `assess.glmnet`, `roc.glmnet` and `confusion.glmnet`, `object` is a fitted `glmnet` or `cv.glmnet`, `relaxed` or `cv.relaxed` object, or a matrix of predictions (for `roc.glmnet` or `assess.glmnet`).
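A short sketch of changing the number of folds and then reading coefficient values together with variable names, assuming a simulated design matrix whose column names `x1` to `x8` are made up for the example. `coef()` on a `cv.glmnet` object returns a sparse one-column matrix; converting it with `as.matrix()` keeps the row names.

```r
library(glmnet)

set.seed(7)
# Simulated data with named columns (illustrative only)
x <- matrix(rnorm(150 * 8), 150, 8,
            dimnames = list(NULL, paste0("x", 1:8)))
y <- 3 * x[, "x1"] - 2 * x[, "x4"] + rnorm(150)

# 5-fold instead of the default 10-fold cross-validation
cvfit <- cv.glmnet(x, y, nfolds = 5)
head(cvfit$lambda)  # lambda values used in the fits (decreasing)

# Coefficients at lambda.min, converted from a sparse to a dense matrix
cf <- as.matrix(coef(cvfit, s = "lambda.min"))
nonzero <- cf[cf[, 1] != 0, 1]  # named vector of the terms that survive
print(nonzero)
```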

A specific value of `alpha` should be supplied; otherwise `alpha = 1` (the lasso) is assumed by default. Note that `cv.glmnet` does not search for values of `alpha`. The magnitude of the penalty is set by the parameter `lambda`.
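To see what the default `alpha = 1` implies, here is a small comparison with ridge (`alpha = 0`) on simulated data (an assumption for illustration). The `df` component counts nonzero coefficients at each `lambda`: the lasso starts from an all-zero model at the largest `lambda`, while ridge shrinks coefficients without setting them exactly to zero.

```r
library(glmnet)

set.seed(3)
n <- 100; p <- 10
# Simulated data (illustrative only)
x <- matrix(rnorm(n * p), n, p)
y <- x[, 1] + 0.5 * x[, 2] + rnorm(n)

lasso <- glmnet(x, y)             # alpha = 1 (lasso) is the default
ridge <- glmnet(x, y, alpha = 0)  # pure ridge penalty

# df = number of nonzero coefficients at each lambda
lasso$df[1]       # 0: at the largest lambda every lasso coefficient is zero
tail(ridge$df, 1) # p: at the smallest lambda all ridge coefficients are nonzero
```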

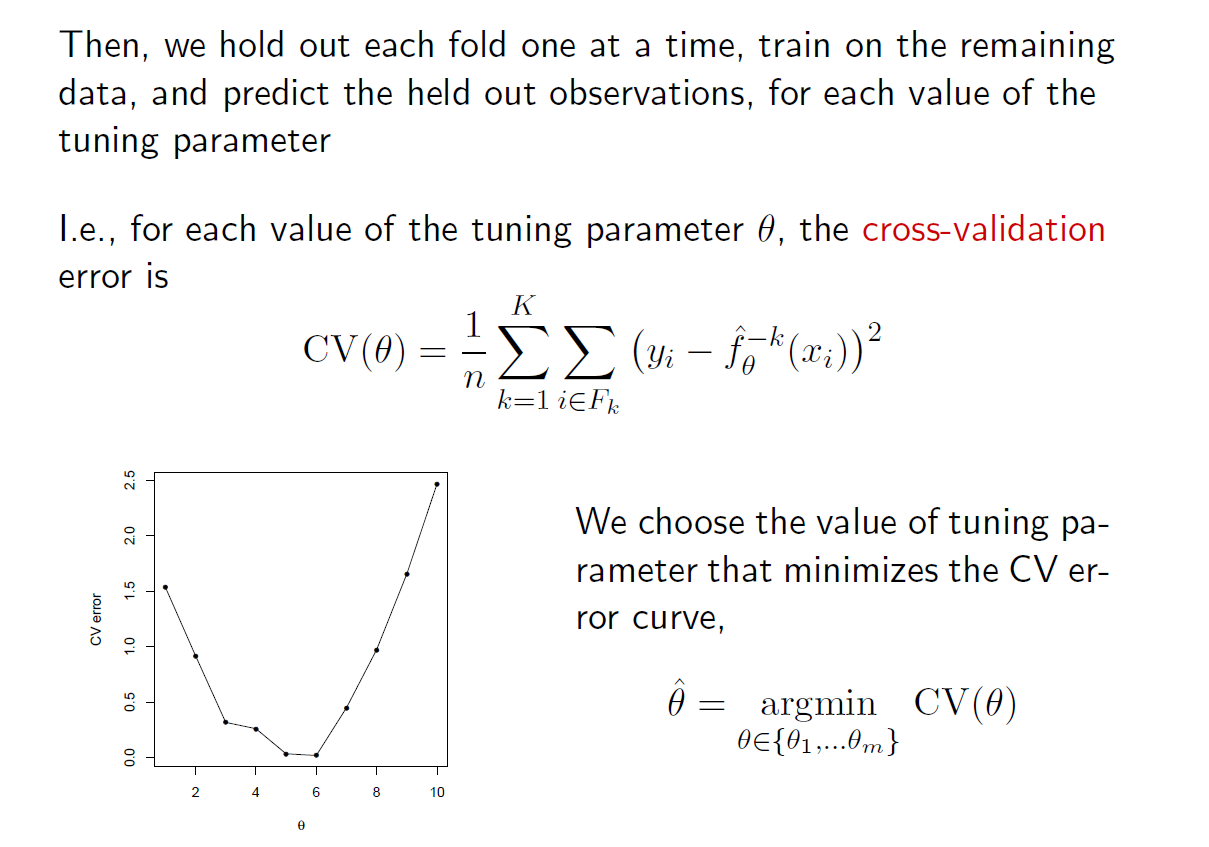

For `roc.glmnet` the model must be binomial, and for `confusion.glmnet` it must be either binomial or multinomial. `newx`: if predictions are to be made, these are the `x` values. The coefficients that you get from `cv.glmnet` are the coefficients that remain after application of the lasso penalty (the lasso is the default). For Gaussian models, `type.measure = "deviance"` or `"mse"` uses squared loss, and `"mae"` uses mean absolute error. Instead of arbitrarily choosing lambda = 4, it is better to use cross-validation to choose the tuning parameter lambda. As for `glmnet`, we do not encourage users to extract the components directly, except for viewing the selected values of lambda.
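A sketch of the binomial case, assuming a simulated 0/1 outcome and an arbitrary 150/50 train/test split (both illustrative). Lambda is chosen by cross-validation on misclassification error instead of being fixed by hand, and the held-out performance is summarised with `confusion.glmnet` and, separately, with a hand-computed error rate from `predict(..., type = "class")`.

```r
library(glmnet)

set.seed(11)
n <- 200; p <- 10
x <- matrix(rnorm(n * p), n, p)
# Simulated 0/1 outcome driven by the first two predictors (illustrative only)
prob <- plogis(1.5 * x[, 1] - x[, 2])
y <- rbinom(n, 1, prob)

train <- 1:150
test <- 151:200

# Choose lambda by 10-fold CV on misclassification error
cvfit <- cv.glmnet(x[train, ], y[train], family = "binomial",
                   type.measure = "class")

# Confusion matrix on held-out data (binomial or multinomial models only)
cnf <- confusion.glmnet(cvfit, newx = x[test, ], newy = y[test])
print(cnf)

# Test misclassification rate computed directly at lambda.min
pred <- predict(cvfit, newx = x[test, ], s = "lambda.min", type = "class")
test_err <- mean(pred != y[test])
```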

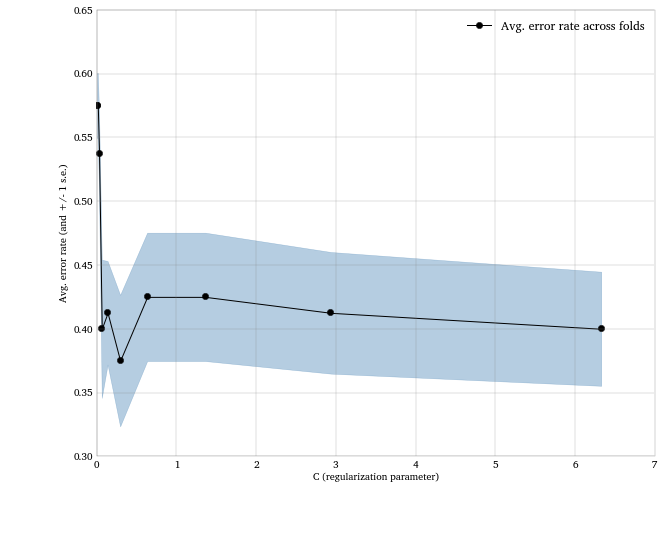

In addition to all the `glmnet` parameters, `cv.glmnet` has its own special parameters, including `nfolds` (the number of folds), `foldid` (user-supplied folds) and `type.measure` (the loss used for cross-validation). Since glmnet does not produce standard errors, those will just be `NA`. As an example: `cvfit <- cv.glmnet(x, y, type.measure = "mse")`.
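A sketch combining these special parameters, assuming simulated data and a 5-fold assignment chosen for illustration: the same `foldid` is passed to two fits that differ only in `type.measure`, so any difference in the selected `lambda.min` comes from the loss alone. The `name` component records which loss was used.

```r
library(glmnet)

set.seed(5)
n <- 120; p <- 15
# Simulated data (illustrative only)
x <- matrix(rnorm(n * p), n, p)
y <- x[, 1] + rnorm(n)

# One fold assignment shared by both fits, so only the loss differs
foldid <- sample(rep(seq_len(5), length.out = n))

cv_mse <- cv.glmnet(x, y, type.measure = "mse", foldid = foldid)
cv_mae <- cv.glmnet(x, y, type.measure = "mae", foldid = foldid)

cv_mse$name  # label of the squared-error loss
cv_mae$name  # label of the absolute-error loss
c(mse = cv_mse$lambda.min, mae = cv_mae$lambda.min)
```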